
Red Hat OpenShift Integration with other Applications for SAP

SAP landscapes are commonly known to be extremely complex, as well as essential to the operation of multinational corporations, which makes integrating them with other applications, for example on Red Hat OpenShift, a demanding task. This complexity comes from the large number of different products that typically make up an SAP installed base (such as ERP, BW, GRC, SCM, and CRM), and from the fact that these products frequently interact with third-party applications to form a wide ecosystem.

Because the SAP landscape holds information on HR, finance, supply chain, customer relationships, business warehousing, and many other areas, a wide range of applications access this data. The result is a heterogeneous environment that developers and architects can find difficult to manage, particularly when they have to define the connections between the SAP estate and the apps that must connect to it.

Traditionally, these integrations have been developed and used following the Enterprise Service Bus (ESB) or Enterprise Application Integration (EAI) patterns. Both patterns can be used to build integration platforms; however, one of their main problems is a lack of integration abstraction. This makes it very difficult to develop components that can be reused across applications, and the resulting integrations are neither portable nor easy to maintain. SAP’s classic integration platform, SAP PI/PO (formerly known as SAP XI), is based on these patterns.

By their nature, ESB and EAI patterns are not well suited to cloud-based applications. Because cloud-native deployment is a clear developer trend, the way businesses connect their applications to the SAP backbone needs to change. Microservices are also becoming very common, since they offer a much more modular approach: processes are divided into smaller, more manageable pieces, so if one of them fails, the entire process does not fail with it.

The API-first approach to integration covers all of these qualities, emphasizing reusability, abstraction, generalization, and portability. It starts by defining the APIs that will be used to call the necessary functions; only once these are defined is the actual code developed. This model is very convenient for users and developers, because they are simply given a set of APIs: the implementation details are abstracted away, so they only need to write calls to those APIs from the application or code they are writing. It also improves portability between platforms, allowing an integration to be used on-premises or on a different cloud from the one it was originally built on without having to redefine it.
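As a minimal sketch of the API-first idea, assuming a purchase-order lookup as the example function, the contract can be written down first as an abstract interface, with implementations plugged in later. The class and function names (`PurchaseOrderAPI`, `get_purchase_order`, `vendor_of`, and so on) are hypothetical and not part of any Red Hat or SAP product; Python is used only for brevity.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class PurchaseOrder:
    """Data contract agreed on before any integration code is written."""
    number: str
    vendor: str
    total: float


class PurchaseOrderAPI(ABC):
    """The API comes first: consumers depend only on this interface."""

    @abstractmethod
    def get_purchase_order(self, number: str) -> PurchaseOrder:
        """Return the purchase order with the given number."""


class InMemoryPurchaseOrderAPI(PurchaseOrderAPI):
    """Hypothetical stand-in implementation; a real one might call SAP."""

    def __init__(self, orders: dict[str, PurchaseOrder]) -> None:
        self._orders = orders

    def get_purchase_order(self, number: str) -> PurchaseOrder:
        return self._orders[number]


def vendor_of(api: PurchaseOrderAPI, number: str) -> str:
    """Consumer code: it calls the API without knowing what sits behind it."""
    return api.get_purchase_order(number).vendor


api = InMemoryPurchaseOrderAPI({"4500000001": PurchaseOrder("4500000001", "ACME", 1200.0)})
print(vendor_of(api, "4500000001"))  # prints "ACME"
```

Because `vendor_of` depends only on the interface, the in-memory stand-in could be swapped for an on-premises or cloud-hosted implementation without changing the caller, which is the portability argument made above.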

The value of an API-first integration platform becomes even more apparent given the requirement to transition to SAP S/4HANA by 2027. One of the principles of S/4HANA is to “keep the core clean”: no custom code should be developed in the system’s core, since custom code in the core is what made SAP version upgrades so cumbersome. To follow this principle, users must move all of their existing custom developments outside the core (and build new ones outside it as well), while those developments continue to connect to and communicate with the SAP core.

Even clients who haven’t started this migration yet can profit from removing their custom code from SAP (they’ll probably find a lot of stuff that they can get rid of because it’s no longer needed), and putting it somewhere else. As a result, migration may go much more quickly and simply.

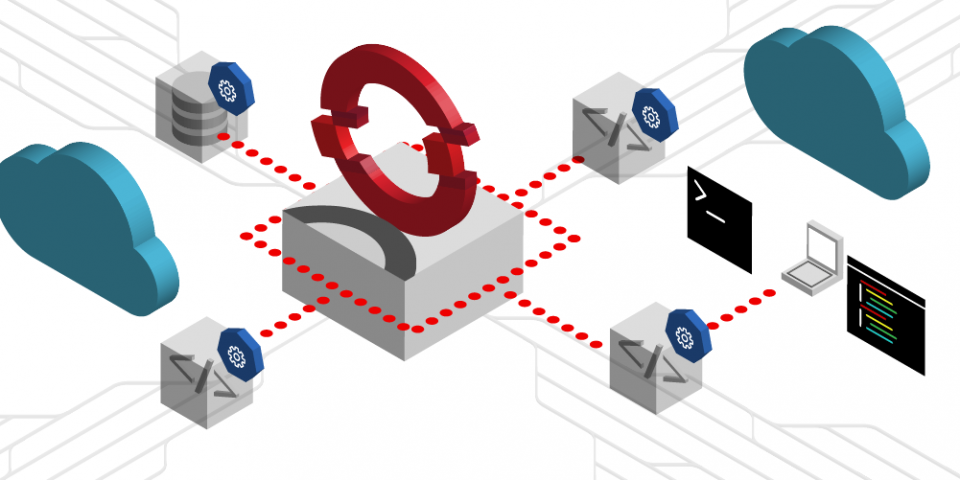

A high-level representation of an API management platform for SAP is shown in Figure 1, along with some of the primary reasons for customers to build and make use of it.

Figure 1. API management platform for SAP

The applications that use SAP data are hosted on satellite systems and reach SAP through the platform’s APIs, so they do not need to know the specifics of the data structures or of the SAP systems themselves.

Implementing the solution with Red Hat OpenShift

Using the integration platform, customers can move all of their legacy apps to Red Hat OpenShift and deploy all of their new applications on Red Hat OpenShift clusters. This fits well with application modernization, another ongoing trend.

Red Hat Integration offers a collection of technologies that enable the integration of SAP and non-SAP applications. One of the main technologies in this solution is Red Hat Fuse, which is built on Apache Camel and includes specific components for SAP that support its main protocols (RFC, OData, IDocs, etc.). Fuse converts between the formats used by third-party programs and those used by SAP, and exposes SAP functionality and data structures as APIs.
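To illustrate the kind of format conversion described above, here is a small sketch in Python (Red Hat Fuse itself would typically express this in Camel routes). It assumes two common SAP conventions: dates stored internally as `YYYYMMDD` (the DATS type), and numeric keys padded with leading zeros to their field length (the ALPHA conversion). The payload keys `vendorId` and `documentDate` and the target field names `VENDOR` and `DOC_DATE` are illustrative assumptions.

```python
from datetime import datetime


def to_sap_date(iso_date: str) -> str:
    """Convert an ISO 8601 date (YYYY-MM-DD) to SAP's internal DATS format (YYYYMMDD)."""
    return datetime.strptime(iso_date, "%Y-%m-%d").strftime("%Y%m%d")


def from_sap_date(sap_date: str) -> str:
    """Convert an SAP DATS date (YYYYMMDD) back to ISO 8601 for third-party consumers."""
    return datetime.strptime(sap_date, "%Y%m%d").date().isoformat()


def alpha_input(value: str, length: int) -> str:
    """Pad purely numeric keys with leading zeros, similar to SAP's ALPHA conversion."""
    value = value.strip()
    return value.zfill(length) if value.isdigit() else value


def to_sap_request(payload: dict) -> dict:
    """Map a hypothetical third-party payload to SAP-style field names and formats."""
    return {
        "VENDOR": alpha_input(payload["vendorId"], 10),
        "DOC_DATE": to_sap_date(payload["documentDate"]),
    }


print(to_sap_request({"vendorId": "4711", "documentDate": "2024-03-05"}))
```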

The second key component is Red Hat 3scale, which acts as the API gateway and is the single point of contact for the apps that must communicate with SAP. It adds role-based access control (RBAC) so that only applications authorized to access the APIs can call them, maintains an inventory of all the APIs required across the whole IT landscape, and lets you monitor how each API is being used.
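The gateway’s responsibilities listed above (an API inventory, role-based access, and usage monitoring) can be sketched as a toy model. This is not the 3scale API or its configuration format; the API names, application keys, and roles are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


class AccessDenied(Exception):
    """Raised when an application calls an API it is not authorized for."""


@dataclass
class ApiGateway:
    """Toy gateway: API inventory, role-based access control, usage counts."""

    # API inventory: API name -> roles allowed to call it (hypothetical names).
    api_roles: dict[str, set[str]]
    # Role assigned to each registered application key (hypothetical keys).
    app_roles: dict[str, str]
    # Number of calls per (application, API) pair, for usage monitoring.
    usage: Counter = field(default_factory=Counter)

    def authorize(self, app_key: str, api_name: str) -> None:
        role = self.app_roles.get(app_key)
        allowed = self.api_roles.get(api_name, set())
        if role not in allowed:
            raise AccessDenied(f"{app_key!r} may not call {api_name!r}")
        self.usage[(app_key, api_name)] += 1


gateway = ApiGateway(
    api_roles={"get_purchase_order": {"purchasing"}},
    app_roles={"procurement-app": "purchasing", "hr-app": "hr"},
)
gateway.authorize("procurement-app", "get_purchase_order")
print(gateway.usage)
```

Unknown applications have no role, so they are rejected by the same check as applications with the wrong role.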


Figure 2. Integration possibilities with Red Hat Fuse and Red Hat 3scale

Use cases for the API management platform

Let’s analyze a few cases in which an API-based integration platform has been used.

The first case is an application that needs SAP to perform a task, such as retrieving the details of a purchase order (PO).

The application connects to the API gateway and requests the API that returns the PO data. If the request is allowed, the API gateway (Red Hat 3scale) forwards it, together with the parameters provided by the application, to the data integration component (Red Hat Fuse). Data integration translates the request into SAP format and opens a remote function call (RFC) connection to the SAP system, where a Business Application Programming Interface (BAPI) is executed to retrieve the PO. The data is then returned to the calling application through the API gateway.

Figure 3. Application invoking an SAP function
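The request path in this first case (gateway, then data integration, then an RFC call to a BAPI) can be sketched with a stubbed RFC client. `BAPI_PO_GETDETAIL` is a standard SAP BAPI for reading purchase orders, but the client interface, the response field names, and the set of authorized apps are assumptions made for illustration; a real deployment would use an SAP connector instead of `FakeRfcClient`.

```python
from typing import Callable, Protocol


class RfcClient(Protocol):
    """Minimal shape assumed for an RFC connection (hypothetical)."""

    def call(self, function_name: str, **params: str) -> dict: ...


class FakeRfcClient:
    """Stub standing in for SAP; answers BAPI_PO_GETDETAIL from a dict."""

    def __init__(self, orders: dict[str, dict]) -> None:
        self.orders = orders
        self.calls: list[tuple[str, dict]] = []

    def call(self, function_name: str, **params: str) -> dict:
        self.calls.append((function_name, params))
        if function_name != "BAPI_PO_GETDETAIL":
            raise ValueError(f"unsupported function: {function_name}")
        return {"PO_HEADER": self.orders[params["PURCHASEORDER"]]}


def integration_get_po(rfc: RfcClient, po_number: str) -> dict:
    """Data-integration step: translate to SAP format, call the BAPI, translate back."""
    sap_po = po_number.zfill(10)  # SAP stores PO numbers as 10-character keys
    header = rfc.call("BAPI_PO_GETDETAIL", PURCHASEORDER=sap_po)["PO_HEADER"]
    return {"poNumber": po_number, "vendor": header["VENDOR"]}


def gateway_get_po(authorized_apps: set[str], app_key: str, po_number: str,
                   backend: Callable[[str], dict]) -> dict:
    """API-gateway step: reject unauthorized apps, otherwise forward the request."""
    if app_key not in authorized_apps:
        raise PermissionError(f"{app_key} is not authorized")
    return backend(po_number)


rfc = FakeRfcClient({"0045000001": {"VENDOR": "0000004711"}})
print(gateway_get_po({"procurement-app"}, "procurement-app", "45000001",
                     lambda po: integration_get_po(rfc, po)))
```

Keeping the gateway and integration steps as separate functions mirrors the split between Red Hat 3scale and Red Hat Fuse: the caller never sees the padded SAP key or the BAPI name.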

The second use case is an application that needs direct access to a data structure in the SAP backend. To do this, it connects to the API gateway and calls the required API. If the application is permitted to use that API, the gateway passes the call on to data integration, which performs a second security check to determine whether the calling application may access the SAP client it is requesting data from (to implement this check, an additional database with authentication tables has been deployed on Red Hat OpenShift). If so, the request is converted into an OData query and sent to SAP. Once the data has been retrieved, data integration returns it to the calling application through the API gateway.

Figure 4. Application retrieving data from SAP
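The client-level check and the OData translation in this second case might look roughly like the sketch below. The `/sap/opu/odata/sap/<service>` path and the `sap-client` query parameter follow common SAP Gateway conventions, but the service name `ZPO_SRV`, the entity set, the host, and the in-memory `CLIENT_ACCESS` table (standing in for the database deployed on OpenShift) are hypothetical.

```python
from urllib.parse import quote, urlencode

# Hypothetical authorization table: application key -> SAP clients it may read.
# In the article, this information lives in a database deployed on Red Hat OpenShift.
CLIENT_ACCESS: dict[str, set[str]] = {"reporting-app": {"100"}, "hr-app": {"200"}}


def check_client_access(app_key: str, sap_client: str) -> None:
    """Second security check, performed in the data-integration layer."""
    if sap_client not in CLIENT_ACCESS.get(app_key, set()):
        raise PermissionError(f"{app_key} may not read SAP client {sap_client}")


def build_odata_url(base: str, service: str, entity_set: str,
                    filters: dict[str, str], fields: list[str], sap_client: str) -> str:
    """Translate a simple request into an OData query URL for an SAP Gateway service."""
    clauses = []
    for name, value in filters.items():
        escaped = value.replace("'", "''")  # OData escapes a quote by doubling it
        clauses.append(f"{name} eq '{escaped}'")
    query = urlencode(
        {"$filter": " and ".join(clauses), "$select": ",".join(fields),
         "$format": "json", "sap-client": sap_client},
        safe="$,'", quote_via=quote,  # keep $, commas and quotes readable; spaces become %20
    )
    return f"{base}/sap/opu/odata/sap/{service}/{entity_set}?{query}"


check_client_access("reporting-app", "100")  # passes; client "200" would raise
print(build_odata_url("https://sap.example.com", "ZPO_SRV", "PurchaseOrders",
                      {"Vendor": "4711"}, ["PoNumber", "Vendor"], "100"))
```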

Another interesting use case involves applications that typically use data from an SAP BW system for business analytics. Because the queries used in this type of system are expensive and can cause performance problems, adding a caching layer to avoid processing bottlenecks in the SAP backend is a smart solution.

Another use case involves exchanging files and messages between source programs (non-SAP) and target systems (SAP), such as the one deployed at Tokyo/TEL.

In the SAP BW analytics scenario, to demonstrate a simpler approach, the apps do not go through the API gateway; instead, they use the JDBC/ODBC connector that comes with Red Hat Integration, which enables direct access to SAP systems by using the Python OData library. Before sending a request to the connector, the application checks whether the requested data is already in a caching PostgreSQL database set up on the Red Hat OpenShift cluster. If it is, the cached data is returned to the application; if not, the query is sent to the SAP BW system in OData format, and the result is saved in the database before being returned to the application.

Figure 5. Application using cached data from the SAP BW system
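The cache-aside logic of the BW scenario can be sketched as follows. To keep the example self-contained, Python’s built-in `sqlite3` stands in for the PostgreSQL cache database, and `fetch_from_bw` is a hypothetical placeholder for the connector call that sends the OData query to SAP BW.

```python
import json
import sqlite3
from typing import Callable


class QueryCache:
    """Cache-aside lookup in front of an expensive SAP BW query.

    sqlite3 stands in for the PostgreSQL cache database described above.
    """

    def __init__(self, conn: sqlite3.Connection, fetch_from_bw: Callable[[str], list]) -> None:
        self.conn = conn
        self.fetch_from_bw = fetch_from_bw
        conn.execute("CREATE TABLE IF NOT EXISTS bw_cache (query TEXT PRIMARY KEY, result TEXT)")

    def get(self, query: str) -> list:
        row = self.conn.execute(
            "SELECT result FROM bw_cache WHERE query = ?", (query,)
        ).fetchone()
        if row is not None:
            return json.loads(row[0])  # cache hit: SAP BW is not contacted
        result = self.fetch_from_bw(query)  # cache miss: run the query against SAP BW
        self.conn.execute(
            "INSERT INTO bw_cache (query, result) VALUES (?, ?)", (query, json.dumps(result))
        )
        self.conn.commit()  # store the result before returning it
        return result


bw_calls: list[str] = []


def fake_bw(query: str) -> list:
    """Hypothetical stand-in for the expensive BW query."""
    bw_calls.append(query)
    return [{"region": "EMEA", "revenue": 100}]


cache = QueryCache(sqlite3.connect(":memory:"), fake_bw)
print(cache.get("revenue_by_region"))
print(cache.get("revenue_by_region"))  # served from the cache
```

This sketch never expires entries; since BW data changes over time, a real cache would need a time-to-live or an invalidation rule.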

The last situation worth addressing is using Red Hat Fuse to replace old, ad hoc, non-portable batch procedures for file sharing. Here, Red Hat Fuse handles all the file manipulation required so that SAP and third-party apps can send and receive files in their native formats, without complicated logic that only works for a small number of workflows.

Figure 6. Example of a batch process for invoices that can be transformed using APIs in Red Hat Fuse
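As an illustration of replacing a batch procedure with a reusable transformation, the sketch below converts a third-party invoice CSV into records shaped for an SAP-side API. The CSV columns, the day/month/year input date format, and the target field names are all assumptions; in the solution described here, the equivalent logic would live in a Red Hat Fuse route.

```python
import csv
import io
from datetime import datetime
from decimal import Decimal


def invoices_csv_to_sap(csv_text: str) -> list[dict[str, str]]:
    """Convert a third-party invoice CSV into records for an SAP-side API (hypothetical fields)."""
    records = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        vendor = row["vendor_id"].strip()
        records.append({
            "INVOICE_ID": row["invoice_id"].strip(),
            # Pad numeric vendor numbers to 10 characters, ALPHA-style.
            "VENDOR": vendor.zfill(10) if vendor.isdigit() else vendor,
            # Source dates are assumed to be DD/MM/YYYY; SAP expects YYYYMMDD.
            "DOC_DATE": datetime.strptime(row["date"].strip(), "%d/%m/%Y").strftime("%Y%m%d"),
            # Decimal avoids float rounding issues for monetary amounts.
            "AMOUNT": str(Decimal(row["amount"].strip()).quantize(Decimal("0.01"))),
            "CURRENCY": row["currency"].strip().upper(),
        })
    return records


sample = "invoice_id,vendor_id,date,amount,currency\nINV-1,4711,05/03/2024,99.5,eur\n"
print(invoices_csv_to_sap(sample))
```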

Summary

Integrating SAP and non-SAP apps is essential both for customers who are still using legacy SAP systems and getting ready to migrate to SAP S/4HANA, and for those who have already made the transition. In both situations, the SAP backend must communicate with many different apps. Since this number is growing quickly and more flexible, agile deployment is required, these customers need a platform that is easy to maintain and can support any kind of integration.

Both of these situations are covered by Red Hat Integration on OpenShift. Because it is built on APIs, it offers a mechanism to build reusable, simple-to-maintain connections between SAP and non-SAP applications. Additionally, it offers a platform for the creation and operation of all SAP-connected apps, enabling the adoption of cloud-native development practices and microservices. This will enable businesses to be much more flexible and shorten their development cycles.

Do you want to integrate Red Hat OpenShift with other applications like SAP? Visit us here.

Here at CourseMonster, we know how hard it may be to find the right time and funds for training. We provide effective training programs that enable you to select the training option that best meets the demands of your company.

For more information, please get in touch with one of our course advisers today or contact us at training@coursemonster.com