NVIDIA GPUs are powering the next generation of trustworthy AI in a secure cloud - Course Monster Blog

By democratizing access to scalable computation, storage, and networking infrastructure and services, cloud computing is enabling a new era of data and AI. Organizations can now collect data on an unprecedented scale and utilize it to train complicated models and produce insights thanks to the cloud.

While the increased need for data has opened up new opportunities, it has also raised privacy and security issues, particularly in regulated areas such as government, banking, and healthcare. Patient records, which are used to train models to assist physicians in diagnosis, are one area where data privacy is critical. Another example is in banking, where models used to assess borrower creditworthiness are being developed using more comprehensive information such as bank records, tax filings, and even social media profiles. To guarantee that this data remains private, governments and regulatory organizations are enacting strict privacy rules and regulations to control the use and sharing of data for AI, such as the General Data Protection Regulation (GDPR) and the planned EU AI Act.

Commitment to a confidential cloud

Microsoft recognizes that trustworthy AI requires a trustworthy cloud: one with built-in security, privacy, and transparency. Confidential computing, a set of hardware and software capabilities that give data owners technical and verifiable control over how their data is shared and used, is a critical component of this vision. Confidential computing relies on a new hardware abstraction called trusted execution environments (TEEs). In a TEE, data is encrypted not just at rest or in transit, but also while in use. TEEs also support remote attestation, which lets data owners remotely verify the configuration of the TEE’s hardware and software and grant specific algorithms access to their data.

Microsoft is committed to building a confidential cloud, where confidential computing is the default for all cloud services. Azure already provides a rich confidential computing platform that includes several kinds of confidential computing hardware (Intel SGX, AMD SEV-SNP), core confidential computing services such as Azure Attestation and Azure Key Vault managed HSM, and application-level services such as Azure SQL Always Encrypted, Azure confidential ledger, and confidential containers on Azure. However, these offerings are limited to CPUs. This is a problem for AI workloads, which rely heavily on accelerators such as GPUs to deliver the performance needed to process large volumes of data and train complex models.

The Microsoft Research Confidential Computing group identified this problem and laid out a vision for confidential AI powered by confidential GPUs in two papers, “Oblivious Multi-Party Machine Learning on Trusted Processors” and “Graviton: Trusted Execution Environments on GPUs.” We share that vision in this post. We also describe the NVIDIA GPU technology that is helping us realize it, and the collaboration between NVIDIA, Microsoft Research, and Azure that brought NVIDIA GPUs into the Azure confidential computing ecosystem.

Vision for confidential GPUs

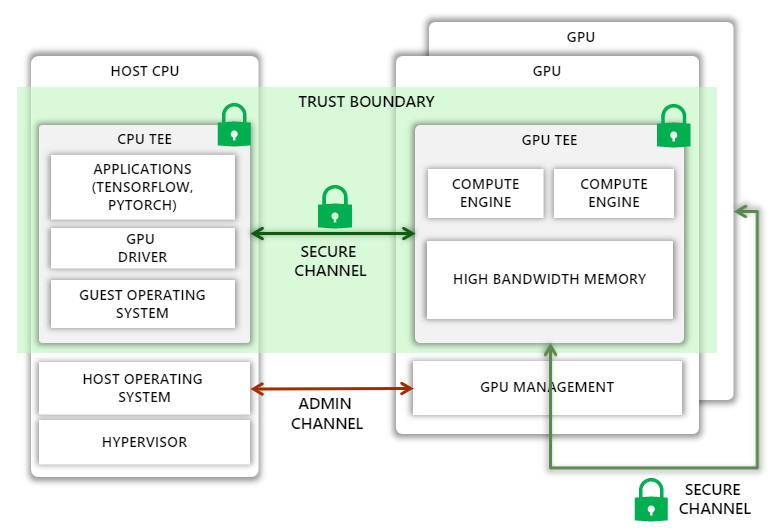

TEEs can now be created using CPUs from companies like Intel and AMD. These TEEs can isolate a process or an entire guest virtual machine (VM), effectively removing the host operating system and the hypervisor from the trust boundary. The goal is to extend this trust boundary to GPUs, allowing code running in the CPU TEE to securely offload computation and data to GPUs.

Unfortunately, extending the trust boundary is not straightforward. On the one hand, we must protect against a variety of attacks, such as man-in-the-middle attacks, in which the attacker can observe or tamper with traffic on the PCIe bus or on an NVIDIA NVLink connecting multiple GPUs, and impersonation attacks, in which the host assigns the guest VM an incorrectly configured GPU, a GPU running outdated or malicious firmware, or one without confidential computing support. On the other hand, the Azure host operating system must retain enough control over the GPU to carry out administrative tasks. Furthermore, the added protection must not introduce large performance overheads, increase thermal design power, or require significant changes to the GPU microarchitecture.

According to the research, this vision may be accomplished by equipping the GPU with the following capabilities:

- A new mode in which all sensitive GPU state, including GPU memory, is isolated from the host.

- A hardware root-of-trust on the GPU chip that can provide verifiable attestations capturing the GPU’s entire security-sensitive state, including all firmware and microcode.

- GPU driver extensions for verifying GPU attestations, establishing a secure communication channel with the GPU, and transparently encrypting all communications between the CPU and GPU.

- Hardware support for transparently encrypting all GPU-GPU communications over NVLink.

- Support for securely attaching GPUs to a CPU TEE in the guest operating system and hypervisor, even if the contents of the CPU TEE are encrypted.

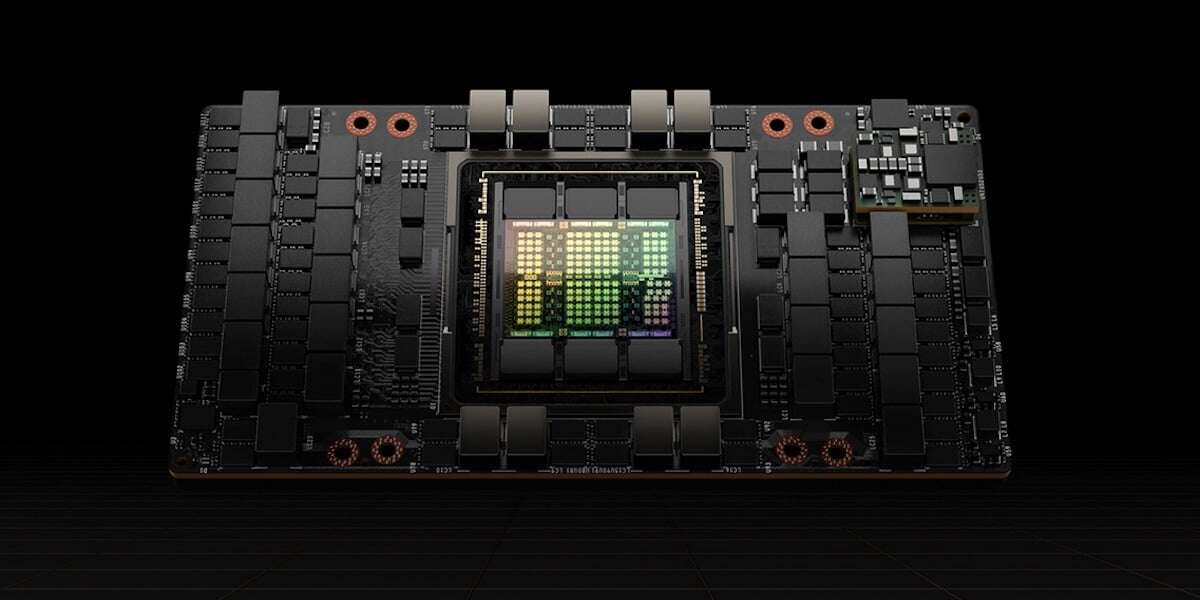

Confidential computing with NVIDIA A100 Tensor Core GPUs

With a new technology called Ampere Protected Memory (APM) in the NVIDIA A100 Tensor Core GPUs, NVIDIA and Azure have taken a significant step toward realizing this vision. In this section, we describe how APM supports confidential computing within the A100 GPU to achieve end-to-end data confidentiality.

APM introduces a new confidential mode of execution in the A100 GPU. In this mode, the GPU marks a region of high-bandwidth memory (HBM) as protected and helps prevent leaks through memory-mapped I/O (MMIO) access into this region from the host and peer GPUs. Only authenticated and encrypted traffic is permitted to and from the region.

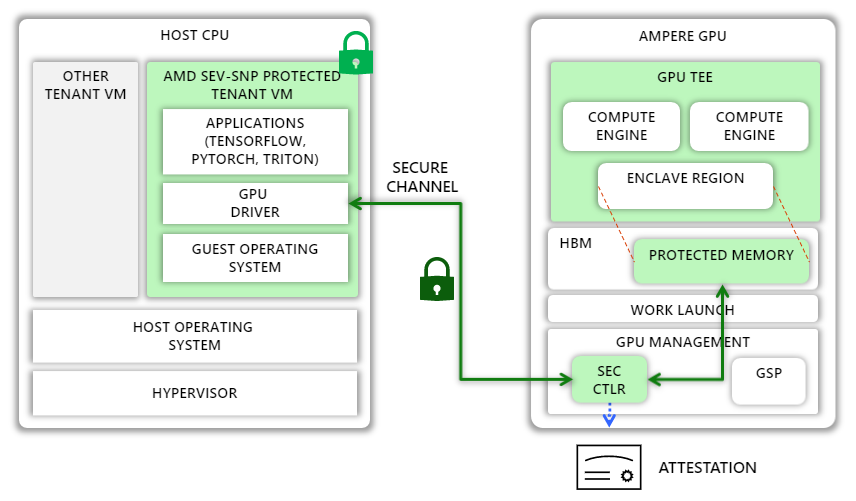

In confidential mode, the GPU can be paired with any external entity, such as a TEE on the host CPU. To enable this pairing, the GPU includes a hardware root-of-trust (HRoT). NVIDIA provisions the HRoT with a unique identity and a corresponding certificate during manufacturing. The HRoT also implements authenticated and measured boot by measuring the GPU’s firmware as well as the firmware of other microcontrollers on the GPU, including a security microcontroller called SEC2. SEC2, in turn, can generate attestation reports that include these measurements and are signed by a fresh attestation key, which is endorsed by the unique device key. Any external party can use these reports to verify that the GPU is in confidential mode and running known good firmware.
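The attestation check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not NVIDIA's report format or API: the function names, the report layout, and the nonce used for freshness are hypothetical, and an HMAC over a shared key stands in for the asymmetric signature that the real attestation key would produce.

```python
import hashlib
import hmac
import json

# Hypothetical stand-in for the attestation key. Real hardware would sign with
# an asymmetric key endorsed by the device key, not with a shared secret.
ATTESTATION_KEY = b"toy-attestation-key"


def measure(firmware_images):
    """Return a SHA-256 digest per firmware image, keyed by component name."""
    return {name: hashlib.sha256(blob).hexdigest()
            for name, blob in sorted(firmware_images.items())}


def make_report(firmware_images, confidential_mode, nonce):
    """Build a signed report over the measurements, the mode flag, and a nonce."""
    body = {
        "measurements": measure(firmware_images),
        "confidential_mode": confidential_mode,
        "nonce": nonce,  # assumption: the verifier supplies a nonce for freshness
    }
    payload = json.dumps(body, sort_keys=True).encode()
    signature = hmac.new(ATTESTATION_KEY, payload, hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_report(report, known_good, expected_nonce):
    """Accept only an authentic, fresh report for known good firmware in confidential mode."""
    payload = json.dumps(report["body"], sort_keys=True).encode()
    expected = hmac.new(ATTESTATION_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, report["signature"]):
        return False
    body = report["body"]
    return (body["nonce"] == expected_nonce
            and body["confidential_mode"] is True
            and body["measurements"] == known_good)
```

A verifier would compute `known_good = measure(...)` from trusted firmware images ahead of time, then call `verify_report(report, known_good, nonce)` on each report it receives. A report with a changed measurement, a cleared mode flag, or a stale nonce is rejected.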

When the NVIDIA GPU driver in the CPU TEE loads, it checks whether the GPU is in confidential mode. If so, the driver requests an attestation report and verifies that the GPU is a genuine NVIDIA GPU running known good firmware. Once the GPU is authenticated, the driver establishes a secure channel with the SEC2 microcontroller on the GPU, using the Security Protocol and Data Model (SPDM)-backed Diffie-Hellman-based key exchange protocol to establish a fresh session key. When the exchange completes, the GPU driver and SEC2 hold the same symmetric session key.
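To show why both sides end up with the same symmetric key, here is a minimal Diffie-Hellman sketch in Python. It is not SPDM and not the driver's actual code: the group parameters `P` and `G`, the function names, and the use of SHA-256 to derive the key are all assumptions chosen so the example runs with the standard library alone.

```python
import hashlib
import secrets

# Toy group parameters, for illustration only. 2**127 - 1 is prime but far too
# small for real use, and G = 3 is an arbitrary base.
P = 2**127 - 1
G = 3


def keypair():
    """Return (private exponent, public value) for one side of the exchange."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P - 2]
    return private, pow(G, private, P)


def session_key(own_private, peer_public):
    """Hash the shared Diffie-Hellman secret down to a 32-byte symmetric key."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


# Each side keeps its private exponent and sends only the public value.
driver_private, driver_public = keypair()
sec2_private, sec2_public = keypair()

driver_key = session_key(driver_private, sec2_public)
sec2_key = session_key(sec2_private, driver_public)
```

Both calls compute the same value, `G` raised to the product of the two private exponents modulo `P`, so `driver_key` and `sec2_key` are equal even though neither private exponent crosses the bus. On its own this exchange does not authenticate either party, which is why the attestation check comes first.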

The GPU driver uses the shared session key to encrypt all subsequent data transfers to and from the GPU. Because CPU TEE pages are encrypted in memory and therefore unreadable by GPU DMA engines, the GPU driver allocates pages outside the CPU TEE and writes encrypted data to those pages. On the GPU side, the SEC2 microcontroller decrypts the data transferred from the CPU and copies it to the protected region. Once the data is in cleartext in high-bandwidth memory (HBM), GPU kernels can freely use it for computation.
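The staging-buffer transfer can be modeled as follows. This sketch is hypothetical throughout: `StagingBuffer`, `driver_send`, and `gpu_receive` are invented names, and the cipher is a toy encrypt-then-MAC construction built from HMAC-SHA256 so that the example needs only the standard library. The post does not say which cipher APM uses, and a real implementation would use a vetted authenticated cipher.

```python
import hashlib
import hmac
import secrets


def _keystream(key, nonce, length):
    """Derive `length` bytes from HMAC-SHA256 run in counter mode (toy cipher)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        msg = b"enc" + nonce + counter.to_bytes(8, "big")
        out.extend(hmac.new(key, msg, hashlib.sha256).digest())
        counter += 1
    return bytes(out[:length])


def seal(key, plaintext):
    """Encrypt, then authenticate. Returns nonce || ciphertext || tag."""
    nonce = secrets.token_bytes(12)
    stream = _keystream(key, nonce, len(plaintext))
    ciphertext = bytes(a ^ b for a, b in zip(plaintext, stream))
    tag = hmac.new(key, b"mac" + nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def open_sealed(key, blob):
    """Check the tag, then decrypt. Raises ValueError if the blob was altered."""
    nonce, ciphertext, tag = blob[:12], blob[12:-32], blob[-32:]
    expected = hmac.new(key, b"mac" + nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    stream = _keystream(key, nonce, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, stream))


class StagingBuffer:
    """Models pages outside the CPU TEE, which the host can read or modify."""

    def __init__(self):
        self.pages = b""


def driver_send(key, data, staging):
    """Driver side: only encrypted data ever leaves the CPU TEE."""
    staging.pages = seal(key, data)


def gpu_receive(key, staging):
    """GPU side: authenticate and decrypt before copying into protected memory."""
    return open_sealed(key, staging.pages)
```

With a shared 32-byte `key`, `driver_send(key, data, buf)` followed by `gpu_receive(key, buf)` returns the original `data`, while the host sees only ciphertext in `buf.pages`. If the host flips a byte in the staging pages, `gpu_receive` raises `ValueError` instead of handing corrupted data to the GPU.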

Accelerating innovation with confidential AI

The introduction of APM is an important milestone toward broader adoption of confidential AI in the cloud and beyond. APM is the foundational building block of Azure Confidential GPU VMs, which are currently in private preview. These VMs, developed jointly by NVIDIA, Azure, and Microsoft Research, have up to four A100 GPUs with 80 GB of HBM and APM technology, allowing customers to run AI workloads on Azure with increased security.

However, this is only the beginning. We are excited to take our partnership with NVIDIA to the next level with NVIDIA’s Hopper architecture, which will let customers protect the confidentiality and integrity of data and AI models in use. We believe that confidential GPUs can enable a confidential AI platform on which multiple organizations can collaborate to train and deploy AI models by pooling sensitive data while retaining full control over their data and models. A platform like this can unlock the value of large volumes of data while preserving data privacy, giving organizations the opportunity to drive innovation.

Bosch Research, the research and advanced engineering division of Bosch, is building an AI pipeline to train models for autonomous driving. Much of the data it uses contains personally identifiable information (PII), such as license plate numbers and people’s faces. At the same time, it must comply with GDPR, which requires a legal basis for processing PII, namely data subjects’ consent or legitimate interest. The former is difficult because it is practically impossible to obtain consent from pedestrians and drivers recorded by test cars. Relying on legitimate interest is also difficult because it involves demonstrating, among other things, that there is no less privacy-invasive way of achieving the same purpose. This is where confidential AI shines: using confidential computing can help reduce risks for data subjects and data controllers by limiting data exposure (for example, to specific algorithms), while allowing organizations to train more accurate models.

Microsoft Research is committed to working with the confidential computing ecosystem, including collaborators such as NVIDIA and Bosch Research, to further strengthen security, enable seamless training and deployment of confidential AI models, and help power the next generation of technology.

