How GPUs, DPUs, and MLOps are helping Red Hat and NVIDIA advance enterprise AI projects - Course Monster Blog

Artificial intelligence (AI), along with its Machine Learning (ML) and Deep Learning (DL) capabilities, is transforming how modern businesses operate. That puts pressure on companies to integrate AI into cloud-native applications that provide more insight and value to their customers and employees. In fact, according to the Red Hat Global Outlook study for 2022, AI/ML is the top emerging technology workload most likely to be addressed in the next 12 months, with 53% of IT leaders surveyed naming it as a key priority.

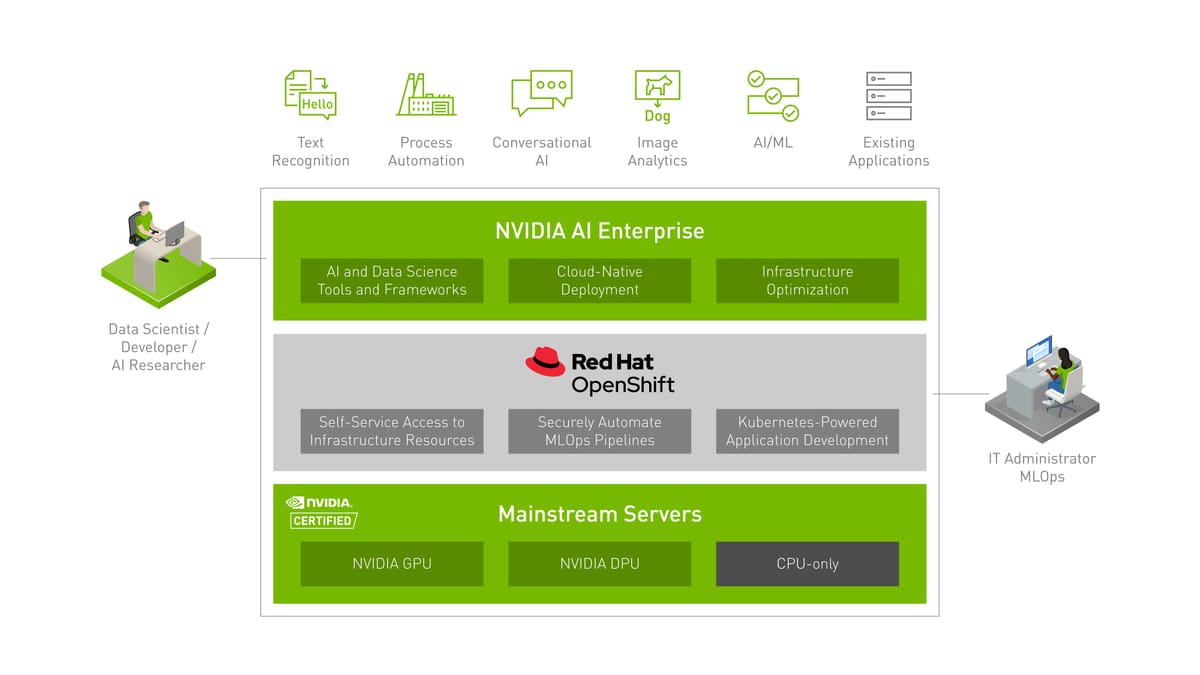

Red Hat is extending OpenShift support to additional infrastructure footprints to help enterprises build and deploy AI/ML-powered cloud-native apps faster and more easily. OpenShift provides a scalable platform for machine learning operations (MLOps) that spans NVIDIA DGX systems, Arm-based public cloud instances, and a new class of data center hardware: NVIDIA BlueField-2 data processing units (DPUs).

In a new curated lab for NVIDIA AI Enterprise, available on NVIDIA LaunchPad, enterprises can explore Red Hat OpenShift for AI workloads for free.

For many years, Red Hat has collaborated with NVIDIA, most recently as a key member of the new NVIDIA AI Accelerated initiative, which helps partners build world-class AI solutions on the NVIDIA AI platform.

This partnership has produced many capabilities that help software developers, IT operators, and data scientists in enterprises around the world. The full-stack automated operations and self-service provisioning capabilities of Red Hat OpenShift help teams collaborate more effectively and move ideas from development to production faster and more securely, for both applications and IT infrastructure.

OpenShift capabilities such as integration with NVIDIA GPUs through the GPU Operator, support for NVIDIA NGC containers, and management of Kubernetes containers with OpenShift on NVIDIA BlueField DPUs help accelerate data analytics, modeling, and inferencing, and help secure networking. These capabilities apply across the whole journey, from infrastructure planning and day-zero activities through advanced AI model deployments.
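To make the GPU Operator integration more concrete: once the operator is running, GPU nodes advertise GPUs to Kubernetes as the extended resource `nvidia.com/gpu`, and a workload asks for them through its resource limits. The sketch below builds such a Pod manifest in Python and prints it as JSON. It is an illustration under stated assumptions, not an official NVIDIA or Red Hat template: the pod name, the container name, the NGC image tag, and the `nvidia-smi` smoke-test command are all hypothetical choices.

```python
import json

# Extended resource name advertised by the NVIDIA device plugin,
# which the GPU Operator deploys on GPU-equipped nodes.
GPU_RESOURCE = "nvidia.com/gpu"


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests `gpus` NVIDIA GPUs.

    `name` and `image` are illustrative inputs; nothing here is tied to a
    particular OpenShift or NVIDIA AI Enterprise release.
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "main",  # hypothetical container name
                    "image": image,
                    # Assumed smoke test: print the GPUs visible in the container.
                    "command": ["nvidia-smi"],
                    # GPUs are requested as limits; the scheduler only places
                    # the pod on a node with that many unallocated GPUs.
                    "resources": {"limits": {GPU_RESOURCE: gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical NGC image tag; pick a current tag from the NGC catalog.
    manifest = gpu_pod_manifest("gpu-smoke-test", "nvcr.io/nvidia/pytorch:22.04-py3")
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and running `oc apply -f` on it would submit the pod; emitting a plain dictionary keeps the sketch free of any client-library dependency.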

MLOps is enabled by bringing OpenShift’s DevOps and GitOps automation capabilities to the whole AI life cycle, allowing closer cooperation between data scientists, ML engineers, software developers, and IT operations. To keep predictions accurate over time, MLOps lets enterprises automate and simplify the iterative process of integrating models into software development, production rollout, monitoring, retraining, and redeployment.
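The iterative monitor, retrain, and redeploy cycle described above can be sketched as a single decision step. This is a deliberately simplified, hypothetical illustration rather than how OpenShift or NVIDIA AI Enterprise implement MLOps: `ModelVersion`, `mlops_step`, the 0.90 accuracy threshold, and the rule of promoting a retrained model only when it scores better than the degraded production accuracy are all assumptions chosen for clarity.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical record of a trained model."""
    version: int
    accuracy: float  # accuracy measured on a validation set after training


def mlops_step(
    deployed: ModelVersion,
    monitored_accuracy: float,
    retrain: Callable[[ModelVersion], ModelVersion],
    threshold: float = 0.90,  # assumed acceptable production accuracy
) -> Tuple[ModelVersion, str]:
    """Run one monitor -> retrain -> redeploy iteration.

    Returns the model that should serve traffic afterwards and the action taken.
    """
    # Monitoring: production accuracy is still acceptable, so change nothing.
    if monitored_accuracy >= threshold:
        return deployed, "keep"
    # Retraining: produce a candidate model (for example, on fresher data).
    candidate = retrain(deployed)
    # Redeployment gate: promote the candidate only if it beats what
    # the deployed model is currently achieving in production.
    if candidate.accuracy > monitored_accuracy:
        return candidate, "redeploy"
    return deployed, "keep (candidate rejected)"


if __name__ == "__main__":
    # Stand-in for a real training pipeline: always yields accuracy 0.93.
    def fake_retrain(m: ModelVersion) -> ModelVersion:
        return ModelVersion(m.version + 1, 0.93)

    v1 = ModelVersion(1, 0.95)
    print(mlops_step(v1, 0.97, fake_retrain))  # (ModelVersion(version=1, accuracy=0.95), 'keep')
    print(mlops_step(v1, 0.84, fake_retrain))  # (ModelVersion(version=2, accuracy=0.93), 'redeploy')
```

In a real pipeline each branch would trigger automation (a training job, a GitOps commit that updates the serving manifest, and so on); keeping the decision as a pure function makes that policy easy to test on its own.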

Today, we’re announcing a significant step forward in enabling MLOps by combining Red Hat OpenShift with the NVIDIA AI Enterprise software package to speed and simplify the creation, deployment, and administration of new AI-powered intelligent apps.

Certification of Red Hat OpenShift with NVIDIA AI Enterprise

NVIDIA AI Enterprise 2.0, a complete portfolio of AI tools and frameworks from NVIDIA, is now certified and supported with Red Hat OpenShift. The combination of Red Hat OpenShift, the industry’s leading enterprise Kubernetes platform, and the NVIDIA AI Enterprise software suite running on NVIDIA-Certified Systems provides a scalable AI platform that helps accelerate a wide range of AI use cases, including DL and ML, across industries, including healthcare, financial services, manufacturing, and more.

This solution combines key technologies and support from NVIDIA and Red Hat to help organizations create, deploy, manage, and scale AI-powered cloud-native apps more securely. It is available on Red Hat- and NVIDIA-certified server systems, and on virtualization platforms such as VMware vSphere running on those servers, so users can deploy AI workloads consistently across the hybrid cloud, on bare metal or in virtualized environments.

This all-in-one solution reduces deployment risk and supports smooth operational growth. It gives data scientists self-service options for quickly building and sharing AI models before they go live in production. MLOps workflows can also use Red Hat OpenShift’s integrated DevOps capabilities to accelerate the continuous delivery of AI-powered apps.

NVIDIA AI Enterprise with Red Hat OpenShift provides businesses with a comprehensive set of AI tools and frameworks to help them build AI-powered cloud-native apps and high-performance data analytics more quickly. It lets businesses run new cloud-native workloads on their existing infrastructure while maintaining enterprise-level management, security, and availability.

Red Hat and NVIDIA have made OpenShift available on NVIDIA LaunchPad for enterprises that are evaluating accelerated AI/ML infrastructure on a Kubernetes platform and would like hands-on lab experience designing, optimizing, and orchestrating resources for AI and data science workloads.

Running on NVIDIA’s proprietary GPU-accelerated computing infrastructure, the free lab lets IT professionals test OpenShift on the same hardware and software configuration that they can later access or buy. Because Red Hat OpenShift is available on the LaunchPad platform, customers can explore Kubernetes- and DevOps-powered accelerated AI/ML infrastructure without straining their budgets. The lab also gives them a preview of what to expect from a finished deployment.

We invite you to try Red Hat OpenShift with NVIDIA AI Enterprise on NVIDIA LaunchPad and benefit from the infrastructure’s ease of use, automation, and access to the latest GPU accelerators.

Here at CourseMonster, we know how hard it can be to find the right time and funds for training. We provide effective training programs that let you select the training option that best meets your company’s needs.

For more information, please get in touch with one of our course advisers today or contact us at training@coursemonster.com
