Exploring the advantages of managing your microservices architecture with this open-source...

The Confidential Containers Project—what is it?

Confidential Containers (CoCo) is a new sandbox project of the Cloud Native Computing Foundation (CNCF) that enables cloud-native confidential computing across a variety of hardware platforms and technologies. Various hardware and software companies are involved in the project, including Alibaba Cloud, AMD, ARM, IBM, Intel, Microsoft, Red Hat, Rivos, and others.

To improve the security of user data, the CoCo project integrates software frameworks with hardware security technologies such as Intel SGX, Intel TDX, AMD SEV, and IBM Z Secure Execution. The result is a level of confidentiality based on hardware-level cryptography rather than on trust in cloud service providers and their employees. CoCo is intended to support a variety of environments, including public clouds, on-premises systems, and edge computing.

The Confidential Containers (CoCo) project aims to standardize confidential computing at the container level and simplify its use in Kubernetes. The goal is to let Kubernetes users deploy confidential container workloads using familiar workflows and tools, without needing a deep understanding of the underlying confidential computing technology.

The CoCo project aims to integrate Trusted Execution Environment (TEE) infrastructure with the cloud-native world. A TEE is a core element of a confidential computing system: a secure, isolated environment that confidential computing (CC) capable hardware protects from unauthorized access and alteration while in use. In the context of confidential containers, the terms “enclave”, “trusted enclave”, and “secure enclave” are used interchangeably with TEE.

What does Confidential Containers actually mean?

With Confidential Containers, you can deploy your workload on infrastructure owned by another party while greatly lowering the risk that an unauthorized party will access your workload data and steal your secrets. That infrastructure may belong to a cloud provider, to a different department within your organization, such as the IT department, or even to an untrusted third party. This level of security is achieved by protecting the computer’s memory, along with the other low-level hardware resources that your workload needs.

These resources include the CPU’s current state, physical memory, interrupts, and more. Cryptography-based proofs make it possible to verify that nobody has interfered with your workload, loaded software you didn’t want, read or changed the memory used by your workload, injected a malicious CPU state to try to derail your software, or otherwise done anything out of the ordinary. In other words, Confidential Containers helps ensure that your software runs without being tampered with or, if it does not, that you are informed.

This blog focuses on the initial community release, scheduled for September 2022. The following sections explain the objectives of this release and the use cases it supports, and summarize the architecture components it includes.

Goals of the first release of Confidential Containers

These topics are the main focus of this release:

- Simplicity – deployment and configuration are handled by the CoCo operator, a specialized Kubernetes operator. Our objective is to hide most of the hardware-dependent components to make this technology as accessible as possible.

- Stability – supporting continuous integration (CI) for the release’s major workflows. We want to make sure that current use cases continue to work as the community expands and more contributors join.

- Documentation – thorough, understandable instructions on how to deploy and use this release. Confidential computing is a broad and fast-moving field with many acronyms, building blocks, and technology layers, so we want to give users enough information to understand and apply the use cases we describe.

Non-goals for the release include:

- Complete support and CI testing for all commonly used hardware architectures

- Optimization of resources and performance

- Complete integration with third-party services for key brokering or attestation

- Full functionality and security features for all basic Kubernetes tools: some commands, especially those that access sensitive data in the workload, may either not work (being denied for security reasons) or continue to route data through insecure means

- Support for upstream versions of CRI-O and containerd (this release relies on a customized version of containerd, which the operator installs for you)

According to Red Hat, these restrictions should be resolved in later releases.

Use cases supported in this release

The following use cases are supported by this release:

- creating a sample CoCo workload

- creating a CoCo workload from a pre-existing encrypted image

- creating a CoCo workload from a pre-existing encrypted image on hardware that supports confidential computing (CC HW)

- building a new encrypted container image and deploying it as a CoCo workload
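To make the first use case more concrete, here is a minimal sketch of what a sample workload definition could look like. It assumes that the confidential runtime is selected through the standard Pod field `runtimeClassName`; the runtime class name, pod name, and image reference below are hypothetical placeholders, not values taken from the CoCo release.

```python
import json


def coco_pod_manifest(name: str, image: str, runtime_class: str) -> dict:
    """Build a plain Kubernetes Pod manifest that requests a given runtime class."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # runtimeClassName is a standard Pod field; the value passed in
            # here is a hypothetical name for the confidential runtime.
            "runtimeClassName": runtime_class,
            "containers": [{"name": name, "image": image}],
        },
    }


manifest = coco_pod_manifest(
    name="coco-demo",
    image="registry.example.com/demo/encrypted-app:latest",  # hypothetical image
    runtime_class="example-cc-runtime",  # hypothetical runtime class
)
print(json.dumps(manifest, indent=2))
```

Apart from the runtime class, the manifest is an ordinary Pod definition, in line with the project's goal of letting users keep their familiar workflows and tools.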

Some insight into the CoCo architecture

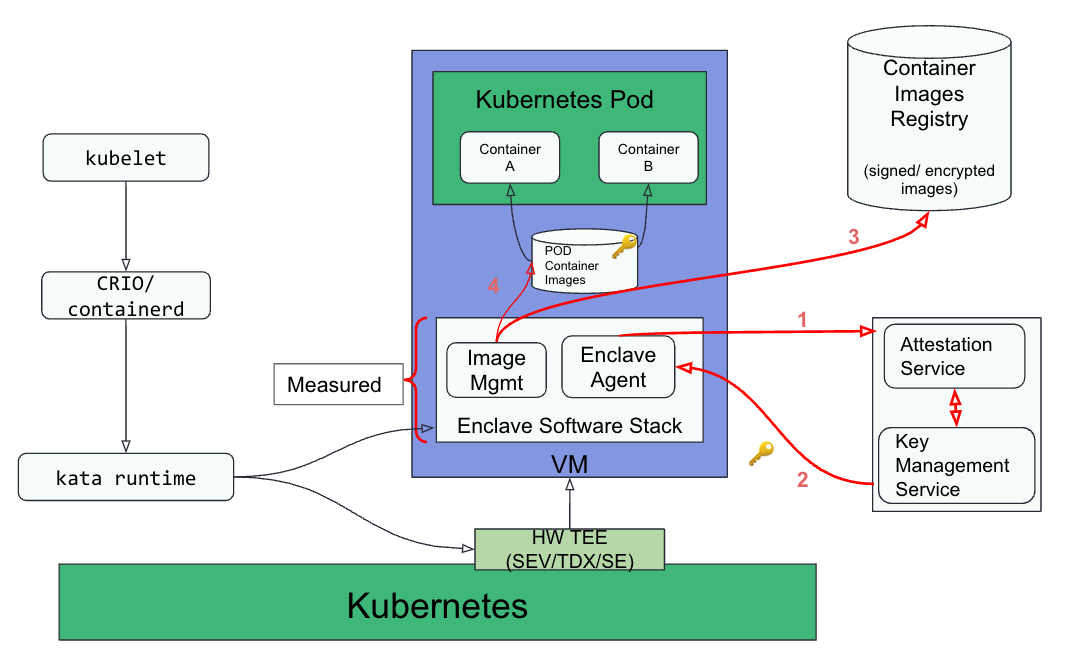

The diagram above gives a high-level overview of the key components that make up and interact with the CoCo solution.

The CoCo solution embeds a Kubernetes pod inside a virtual machine (VM), together with an engine called the enclave software stack. Each Kubernetes pod maps one-to-one to a VM-based TEE (or enclave). The container images, which can either be signed or encrypted, are stored inside the enclave.

The enclave software stack is measured, which means that a trusted cryptographic procedure verifies the content of the stack. The stack includes the enclave agent, which is responsible for initiating attestation and fetching the secrets from the key management service.
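As a rough illustration of what "measured" means, the sketch below folds each component of a software stack into a running hash, so that any change to any component (or to their order) produces a different final value. This is a simplified model: the hash function, the all-zero initial value, and the component names are assumptions made for the example, and real TEE hardware defines its own measurement scheme.

```python
import hashlib


def extend(measurement: bytes, component: bytes) -> bytes:
    """Fold one component into the running measurement: H(previous || H(component))."""
    return hashlib.sha384(measurement + hashlib.sha384(component).digest()).digest()


def measure_stack(components: list[bytes]) -> str:
    """Return a hex measurement covering every component, in order."""
    measurement = bytes(48)  # assumed all-zero start value (SHA-384 digest size)
    for component in components:
        measurement = extend(measurement, component)
    return measurement.hex()


# Hypothetical component contents, for illustration only.
stack = [b"firmware-v1", b"kernel-v1", b"enclave-agent-v1"]
tampered = [b"firmware-v1", b"kernel-v1", b"enclave-agent-modified"]

reference = measure_stack(stack)
print(measure_stack(stack) == reference)     # True: same stack, same measurement
print(measure_stack(tampered) == reference)  # False: one component changed
```

Because the measurement is deterministic, a verifier that knows the expected value can tell whether the stack that actually booted is the one it approved.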

The container image registry and the relying party, which combines the attestation service and key management service, are supporting elements of the system.

The container image registry is responsible for storing and delivering encrypted container images. Container images usually consist of layers, and each layer is encrypted individually. For the workload to be effectively protected, at least one layer must be encrypted.
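The "at least one encrypted layer" rule can be checked against an image manifest. The sketch below assumes the convention used by OCI image encryption tooling, where an encrypted layer's media type carries a `+encrypted` suffix; the manifest content and digests are made-up placeholders.

```python
def encrypted_layers(manifest: dict) -> list[str]:
    """Return the digests of layers whose media type is marked as encrypted."""
    return [
        layer["digest"]
        for layer in manifest.get("layers", [])
        # Assumption: encrypted layers are identified by a "+encrypted" suffix.
        if layer.get("mediaType", "").endswith("+encrypted")
    ]


def is_protected(manifest: dict) -> bool:
    """A workload image counts as protected if at least one layer is encrypted."""
    return len(encrypted_layers(manifest)) > 0


# Hypothetical manifest with one plain base layer and one encrypted layer.
example_manifest = {
    "schemaVersion": 2,
    "layers": [
        {
            "mediaType": "application/vnd.oci.image.layer.v1.tar+gzip",
            "digest": "sha256:" + "a" * 64,
        },
        {
            "mediaType": "application/vnd.oci.image.layer.v1.tar+gzip+encrypted",
            "digest": "sha256:" + "b" * 64,
        },
    ],
}

print(encrypted_layers(example_manifest))
print(is_protected(example_manifest))
```

A common pattern is to leave public base layers unencrypted and encrypt only the layers that carry sensitive application content.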

The attestation service is responsible for comparing the measurement of the enclave software stack against a list of authorized workloads in order to approve or deny the delivery of keys.

The key management service is responsible for storing the secrets that the workload requires to run, including disk decryption keys, and for delivering these secrets to the enclave agent.

Once the VM has been launched, the path CoCo takes can be summed up in the following four steps (shown in red in the picture above):

- The enclave agent sends a request to the attestation service. The agent must use the measurement of the enclave to answer the attestation service’s cryptographic challenge and prove the identity of the workload. If the enclave agent responds successfully, the attestation service notifies the key management service that secrets can be delivered.

- If the workload is authorized to run, the key management service locates the secrets associated with the workload and delivers them to the agent. The secrets needed include the decryption keys for the disks in use.

- The image management service inside the enclave downloads container images from the container image registry, verifies them, and decrypts them locally into encrypted storage. At that point, the enclave can use the container images.

- The enclave software stack creates a pod and starts running containers inside the virtual machine (all containers within the pod are protected).
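The first two steps above can be sketched as a small simulation. This is a toy model under stated assumptions, not the CoCo implementation: the class and function names are hypothetical, the measurement is a plain SHA-256 hash, and an HMAC with a shared key stands in for the hardware-signed evidence that a real TEE would produce.

```python
import hashlib
import hmac
import secrets

# Stand-in for the hardware root of trust. Real TEEs sign evidence with
# keys rooted in the CPU vendor; a shared HMAC key is a simplification.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(stack: bytes) -> bytes:
    """Toy measurement: a hash of the enclave software stack."""
    return hashlib.sha256(stack).digest()


class AttestationService:
    def __init__(self, authorized: set[bytes]):
        self.authorized = authorized
        self.nonce = b""

    def challenge(self) -> bytes:
        """Issue a fresh nonce so that old evidence cannot be replayed."""
        self.nonce = secrets.token_bytes(16)
        return self.nonce

    def verify(self, measurement: bytes, evidence: bytes) -> bool:
        """Accept only valid evidence for an authorized measurement."""
        expected = hmac.new(HARDWARE_KEY, self.nonce + measurement, hashlib.sha256).digest()
        return hmac.compare_digest(expected, evidence) and measurement in self.authorized


class KeyManagementService:
    def __init__(self, secrets_by_measurement: dict[bytes, bytes]):
        self.secrets_by_measurement = secrets_by_measurement

    def release(self, measurement: bytes, approved: bool) -> bytes:
        """Deliver the workload's secrets only after attestation approval."""
        if not approved:
            raise PermissionError("attestation failed, secrets withheld")
        return self.secrets_by_measurement[measurement]


def enclave_agent_boot(stack: bytes, attestation: AttestationService,
                       kms: KeyManagementService) -> bytes:
    """Step 1: answer the challenge. Step 2: receive the secrets if approved."""
    measurement = measure(stack)
    nonce = attestation.challenge()
    evidence = hmac.new(HARDWARE_KEY, nonce + measurement, hashlib.sha256).digest()
    approved = attestation.verify(measurement, evidence)
    return kms.release(measurement, approved)


good_stack = b"enclave-stack-v1"  # hypothetical stack contents
attestation = AttestationService({measure(good_stack)})
kms = KeyManagementService({measure(good_stack): b"disk-decryption-key"})

print(enclave_agent_boot(good_stack, attestation, kms))
try:
    enclave_agent_boot(b"enclave-stack-tampered", attestation, kms)
except PermissionError as err:
    print("denied:", err)
```

Steps three and four (downloading, verifying, and decrypting images, then starting the pod) are left out of the sketch; they would consume the secrets returned here.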

Why is Red Hat investing in this project?

As part of the transition to an open hybrid cloud, and of the effort to integrate its key security capabilities, technology advances allow our customers to add another layer of encryption to further protect data in use. Many highly sensitive industries require extra protection for their workloads. Red Hat plans to offer the necessary tools as regulatory environments change.

Red Hat believes that cloud service providers and software developers can benefit significantly from confidential computing. It combines the benefits of the cloud and on-premises deployments while keeping processes simple.

CoCo makes these cutting-edge technologies simpler to use. Red Hat OpenShift, which is based on Kubernetes, is expected to play a significant role in expanding the company’s product line, thanks to its ability to integrate confidential containers transparently.
