Many businesses that have chosen to modernize application development by adopting a cloud-native...

Red Hat OpenShift Service deployment on AWS

Red Hat OpenShift is a popular choice for companies that want a single standard platform for developing and operating all their applications. Standardizing on one platform helps them avoid a complex, heterogeneous environment and simplifies their operations. With Red Hat OpenShift, they can build new cloud-native applications and also migrate their legacy applications to the platform.

A major advantage of OpenShift is that developers need to be familiar with only one interface, while the complexities of the underlying platform stay hidden. This can improve productivity significantly.

Red Hat OpenShift Service on AWS (ROSA)

Some customers who choose OpenShift go a step further in simplifying their setup. They do not want to worry about provisioning and managing the infrastructure for their clusters. Instead, they want their teams to focus solely on developing applications and to be productive right from the start. For these customers, Red Hat OpenShift Service on AWS (ROSA) is a viable option.

ROSA operates entirely on the Amazon Web Services (AWS) public cloud and is jointly managed by Red Hat and AWS. The control plane and compute nodes are fully managed by a team of Red Hat Site Reliability Engineers (SREs), with support from both Red Hat and Amazon. This includes installation, management, maintenance, and upgrades for all nodes.


Deployment options for Red Hat OpenShift Service

There are two main options for deploying ROSA: as a public cluster or as a PrivateLink cluster. In either case, deploying across multiple availability zones is recommended for increased resiliency and high availability.
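The two deployment options can be illustrated with a small sketch that assembles a `rosa create cluster` command line. This is only a sketch under assumptions: the helper name `build_rosa_create_args`, the cluster names, and the subnet IDs are hypothetical, and the flags shown (`--cluster-name`, `--sts`, `--multi-az`, `--private-link`, `--subnet-ids`) should be checked against the ROSA CLI version in use before running anything.

```python
from typing import List, Optional


def build_rosa_create_args(
    cluster_name: str,
    multi_az: bool = True,
    private_link: bool = False,
    use_sts: bool = True,
    subnet_ids: Optional[List[str]] = None,
) -> List[str]:
    """Assemble an argument list for `rosa create cluster` (illustrative only)."""
    args = ["rosa", "create", "cluster", "--cluster-name", cluster_name]
    if use_sts:
        # Use temporary credentials from AWS STS for cluster access.
        args.append("--sts")
    if multi_az:
        # Spread the cluster across several availability zones.
        args.append("--multi-az")
    if private_link:
        # Assumption: a PrivateLink cluster is installed into existing
        # private subnets, so their IDs must be supplied by the caller.
        if not subnet_ids:
            raise ValueError("a PrivateLink cluster needs existing private subnet IDs")
        args.append("--private-link")
    if subnet_ids:
        args += ["--subnet-ids", ",".join(subnet_ids)]
    return args


if __name__ == "__main__":
    # Hypothetical names and subnet IDs, shown only to print the commands.
    print(" ".join(build_rosa_create_args("demo-public")))
    print(" ".join(build_rosa_create_args(
        "demo-private",
        private_link=True,
        subnet_ids=["subnet-aaa", "subnet-bbb", "subnet-ccc"],
    )))
```

The function only builds the argument list and never runs the command, so the result can be reviewed first and then passed to a process runner such as `subprocess.run` once the flags have been verified.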

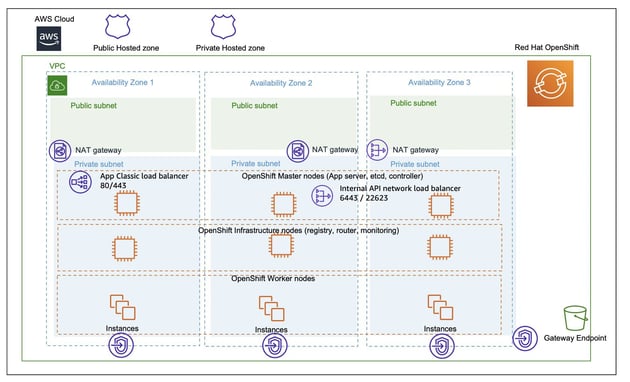

Public clusters are typically used for workloads without stringent security needs. The cluster is deployed in a Virtual Private Cloud (VPC) within a private subnet, which houses the control plane nodes, the infrastructure nodes, and the worker nodes where applications run. Because the cluster must still be accessible from the internet, the VPC also requires a public subnet.

AWS load balancers (Elastic and Network Load Balancers) deployed in the public subnet let both the SRE team and the users of the applications (ingress traffic to the cluster) connect. For users, a load balancer forwards their traffic to the router service on the infrastructure nodes, which then forwards it to the desired application running on a worker node. The SRE team uses a separate AWS account to connect to the control plane and infrastructure nodes through various load balancers.

Figure 1. ROSA public cluster

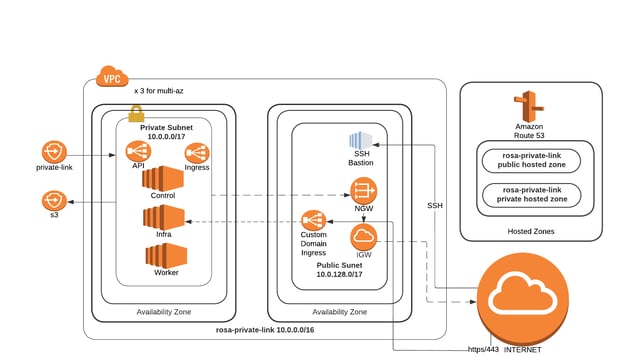

For production workloads with more stringent security requirements, a PrivateLink cluster is recommended. In this case, the VPC housing the cluster has only a private subnet and is inaccessible from the public internet.

The SRE team uses a separate AWS account that connects to an AWS load balancer through an AWS PrivateLink endpoint. The load balancer then forwards traffic to the control plane or infrastructure nodes as necessary. (Once the AWS PrivateLink is established, the customer must approve access from the SRE team’s AWS account.) Users connect to an AWS load balancer, which directs their traffic to the router service on the infrastructure nodes and from there to the worker node where the desired application is running.

In PrivateLink cluster setups, customers often route egress traffic from the cluster to their on-premises infrastructure or to other VPCs in the AWS cloud. This can be done using an AWS Transit Gateway or AWS Direct Connect, which eliminates the need for a public subnet in the VPC housing the cluster. Even if egress traffic needs to reach the internet, a connection can be established (through the AWS Transit Gateway) to another VPC with a public subnet that has an AWS NAT Gateway and an AWS Internet Gateway.

Figure 2. ROSA private cluster with PrivateLink

In both public and PrivateLink deployments, the cluster can interact with other AWS services by utilizing AWS VPC endpoints to communicate with the desired services within the VPC where the cluster resides.
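The subnet rules described for the two cluster types can be captured as a simple check on a planned VPC layout. The following is a minimal sketch, not an AWS or ROSA API: the `Subnet` class, the `check_layout` function, and the identifiers in the usage example are all hypothetical, and the two-zone threshold is just one way to express the multi-zone recommendation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Subnet:
    subnet_id: str           # hypothetical identifier, e.g. "subnet-aaa"
    availability_zone: str   # e.g. "us-east-1a"
    public: bool             # True if the subnet is reachable from the internet


def check_layout(subnets: List[Subnet], private_link: bool) -> List[str]:
    """Return a list of problems with a planned cluster VPC layout."""
    problems = []
    private = [s for s in subnets if not s.public]
    public = [s for s in subnets if s.public]

    # Control plane, infrastructure, and worker nodes live in private subnets.
    if not private:
        problems.append("no private subnet for the cluster nodes")
    # A PrivateLink cluster VPC has only private subnets.
    if private_link and public:
        problems.append("PrivateLink cluster VPC should not contain a public subnet")
    # A public cluster needs a public subnet for its load balancers.
    if not private_link and not public:
        problems.append("public cluster needs a public subnet for its load balancers")
    # Multiple availability zones are recommended for resiliency.
    if len({s.availability_zone for s in private}) < 2:
        problems.append("private subnets span fewer than two availability zones")
    return problems


if __name__ == "__main__":
    plan = [
        Subnet("subnet-aaa", "us-east-1a", public=False),
        Subnet("subnet-bbb", "us-east-1b", public=False),
    ]
    # The same plan suits a PrivateLink cluster but not a public one.
    print(check_layout(plan, private_link=True))
    print(check_layout(plan, private_link=False))
```

Returning a list of messages rather than raising on the first problem makes it easy to show every issue with a plan at once.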

Connecting to the cluster

The preferred method for the SRE team to access and perform administrative tasks on ROSA clusters is the AWS Security Token Service (STS). The principle of least privilege should be applied, granting only the roles necessary for a specific task. The credentials that STS issues are temporary and expire after a short time, so if a similar task needs to be done later, new credentials must be obtained.

The AWS Security Token Service (STS) is also used when connecting the ROSA cluster to other AWS services, such as EC2 for spinning up new servers or EBS for persistent storage needs.
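To make the idea of temporary credentials concrete, here is a minimal sketch of requesting them through the STS `AssumeRole` operation with the `boto3` library. The helper names, the role ARN, and the session name are hypothetical and are not taken from ROSA itself. The sketch assumes `boto3` is installed and AWS credentials are configured before `fetch_temporary_credentials` is called; the other two helpers use only the standard library.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict

MIN_SESSION_SECONDS = 900     # shortest session AssumeRole accepts
MAX_SESSION_SECONDS = 43200   # upper bound; the role's own maximum may be lower


def build_assume_role_request(
    role_arn: str, session_name: str, duration_seconds: int = MIN_SESSION_SECONDS
) -> Dict[str, Any]:
    """Build the keyword arguments for an STS AssumeRole call."""
    if not MIN_SESSION_SECONDS <= duration_seconds <= MAX_SESSION_SECONDS:
        raise ValueError("duration_seconds must be between 900 and 43200")
    return {
        "RoleArn": role_arn,
        "RoleSessionName": session_name,
        "DurationSeconds": duration_seconds,
    }


def credentials_expired(expiration: datetime, now: datetime) -> bool:
    """Temporary credentials are unusable once their expiration has passed."""
    return now >= expiration


def fetch_temporary_credentials(request: Dict[str, Any]) -> Dict[str, Any]:
    """Call STS; needs boto3 and configured AWS credentials."""
    import boto3  # imported lazily so the helpers above work without boto3

    response = boto3.client("sts").assume_role(**request)
    # Contains AccessKeyId, SecretAccessKey, SessionToken, and Expiration.
    return response["Credentials"]


if __name__ == "__main__":
    # Hypothetical role ARN and session name, for illustration only.
    request = build_assume_role_request(
        "arn:aws:iam::123456789012:role/example-task-role", "maintenance-task"
    )
    print(request)
    issued = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    expires = issued + timedelta(seconds=request["DurationSeconds"])
    print(credentials_expired(expires, issued + timedelta(minutes=20)))
```

Keeping the requested session short and choosing a role scoped to a single task is one way to apply the least-privilege principle described above.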

Summary

Regardless of the type of customer, incorporating DevOps practices and modernizing application deployment through an enterprise Kubernetes platform like OpenShift is beneficial. While customers have the option to host it on-premises and manage it themselves, they can also opt for ROSA, which is hosted on the AWS public cloud. ROSA’s ability to interact with a wide range of AWS services enhances the overall value customers can derive from their platform.
