Over the past ten years, CIO responsibilities have been reshaped by software-fueled changes in the competitive landscape, global disruptions, and consumer demand for safe, always-on digital experiences. As innovation accelerates, application delivery grows more complex, and attack surfaces shift, executive-level vision matters more than ever. Modern CIOs make these decisions in a world where a single action, good or bad, can have an outsized impact. As McKinsey Digital frankly put it, “There’s no worse moment to be an average CIO.”

From IT Leader to Growth Driver: A New Breed of CIO

The battle to build a successful multi-cloud strategy and attract top talent is fierce, and the chances of succeeding in the application economy are limited, so top CIOs aren’t waiting around. Instead, they approach tech-driven growth knowing that customer experience (CX) and developer experience (DevEx) are inextricably linked: you cannot have one without the other. As a result, a new generation of CIOs is laser-focused on developing teams, resources, and processes that push the envelope.

Reaching for the Clouds

The advantage of multi-cloud becomes clearer as innovation picks up speed. No other arrangement lets businesses scale effectively, reduce reliance on any single vendor, and increase organizational resilience while drawing on the distinct strengths of multiple cloud providers. However, contemporary CIOs are aware of the challenges of managing multi-cloud environments, and they welcome platforms that not only provide real-time health insight across multiple clouds but also monitor for and alert on security anomalies in those environments. These solutions also give security, operations, and development teams shared visibility, encouraging collaboration, an important sign of DevEx-focused settings.

Innovation on the Fly

CIOs have firsthand experience with how disruptive global crises can be, so being prepared is more important than ever. The world is currently facing multiple crises on geopolitical and health-related fronts. Lessons learned from extreme lifts and shifts, some beneficial and some disastrous, serve as a barometer of how crucial it is to build a resilient company. Future-oriented CIOs aren’t focused on getting teams back to the office in a world irrevocably altered by the forced migration to remote work. Instead, they are putting even more focus on portable, secure IT environments that enable work from anywhere, and they are working with their recruitment counterparts to use this capability to attract and retain top talent at every level of their technology ecosystem.

Here at CourseMonster, we know how hard it can be to find the right time and funds for training. We provide effective training programs that let you select the training option that best meets your company’s needs.

For more information, please get in touch with one of our course advisers today or contact us at training@coursemonster.com.

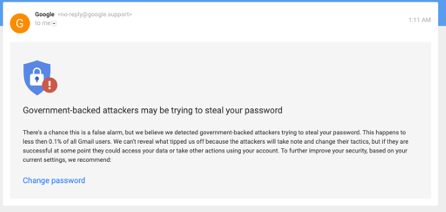


Usually, they will provide a gateway through which you can access useful administration tools for customizing scan criteria and reporting. These interfaces are useful, but they only provide insight into what they can see, not the entire Dark Web. Individuals with this expertise are typically reformed hackers or former law enforcement, military, and intelligence officials. The challenge here, and it is a question of values, is that if a person has gained the trust of the inner sanctum of clandestine trading floors, they have done so through questionable activities. As a result, you may find it difficult to believe they have the necessary fundamental integrity. You’ve seen examples where trade analysts act as double agents, switching sides depending on who pays the most, and cases where they act as both buyer and seller in the same transaction.

![What is Data Sovereignty & GDPR? [Data Storage Location Laws]](https://www.archive360.com/hubfs/key%20to%20data.jpg)